Tech Giants Look to Curb AI's Energy Demands: The Kiplinger Letter

The expansion in AI is pushing tech giants to explore new ways to reduce energy use, while also providing energy transparency.

To help you understand how AI and other new technology are affecting energy consumption, the trends in this space and what we expect to happen in the future, our highly experienced Kiplinger Letter team will keep you abreast of the latest developments and forecasts. (Get a free issue of The Kiplinger Letter or subscribe.) You’ll get all the latest news first by subscribing, but we publish many (but not all) of the forecasts online a few days afterward. Here’s the latest…

The rise of AI is pushing tech giants to find new ways to curb energy use. Firms like Alphabet and Microsoft have always strived for energy efficiency, but AI chips are especially power-hungry, and their energy demands will be unsustainable without big changes.

Cue new tools that help users reduce energy usage. Tools being developed by researchers at the Massachusetts Institute of Technology (MIT) lower power needs with simple techniques, such as capping the amount of energy used by hardware.

The researchers have found that such tweaks don’t hinder the AI’s performance. Another idea is optimizing the mix of AI chips with traditional ones for efficiency. Though it could take a while, expect more energy transparency around AI. Users will eventually get an energy report along with their answers from ChatGPT, reflecting the trend toward large-scale AI.

According to an article in Scientific American, if current trends in AI capacity and adoption continue, NVIDIA, a leader in AI computing, is set to ship 1.5 million AI server units per year by 2027. Running at full capacity, these 1.5 million servers would consume at least 85.4 terawatt-hours of electricity annually, more than many small countries use in a year.
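For readers who want to check the scale of that figure, here is a rough back-of-the-envelope sketch. It assumes every server runs around the clock for a full 8,760-hour year, which is one reading of "full capacity"; the function name is made up for illustration and does not come from the cited article.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years (an assumption)


def implied_avg_power_kw(total_twh_per_year: float, servers: int) -> float:
    """Average continuous draw per server, in kilowatts, implied by a yearly total."""
    total_kwh = total_twh_per_year * 1e9  # 1 terawatt-hour = 1 billion kilowatt-hours
    return total_kwh / servers / HOURS_PER_YEAR


# Figures quoted in the article: 85.4 TWh per year across 1.5 million servers.
per_server_kw = implied_avg_power_kw(85.4, 1_500_000)
print(f"{per_server_kw:.1f} kW per server")  # prints "6.5 kW per server"
```

Under those assumptions, the forecast works out to roughly 6.5 kilowatts of continuous draw per server.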

This forecast first appeared in The Kiplinger Letter, which has been running since 1923 and is a collection of concise weekly forecasts on business and economic trends, as well as what to expect from Washington, to help you understand what’s coming up to make the most of your investments and your money. Subscribe to The Kiplinger Letter.

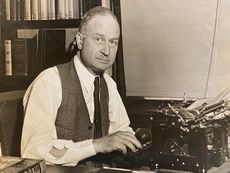

John Miley is a Senior Associate Editor at The Kiplinger Letter. He mainly covers technology, telecom and education, but will jump on other important business topics as needed. In his role, he provides timely forecasts about emerging technologies, business trends and government regulations. He also edits stories for the weekly publication and has written and edited e-mail newsletters.

He joined Kiplinger in August 2010 as a reporter for Kiplinger's Personal Finance magazine, where he wrote stories, fact-checked articles and researched investing data. After two years at the magazine, he moved to the Letter, where he has been for the last decade. He holds a BA from Bates College and a master’s degree in magazine journalism from Northwestern University, where he specialized in business reporting. An avid runner and a former decathlete, he has written about fitness and competed in triathlons.
